MLP Neural Network in Regression

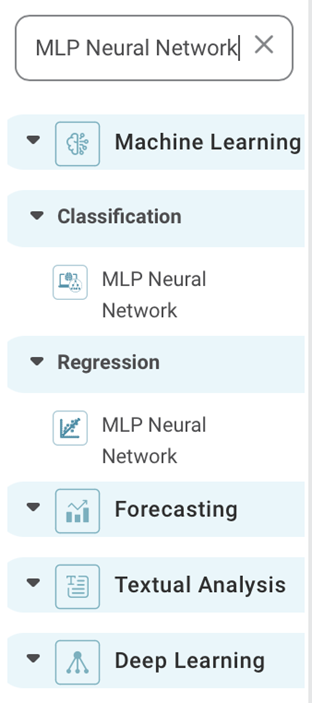

The MLP Neural Network is located in the left task pane under Machine Learning, in Regression. Alternatively, use the search bar to find the MLP Neural Network algorithm. To add the algorithm to the canvas, drag and drop it or double-click it. Click the algorithm to view and select its properties for analysis.

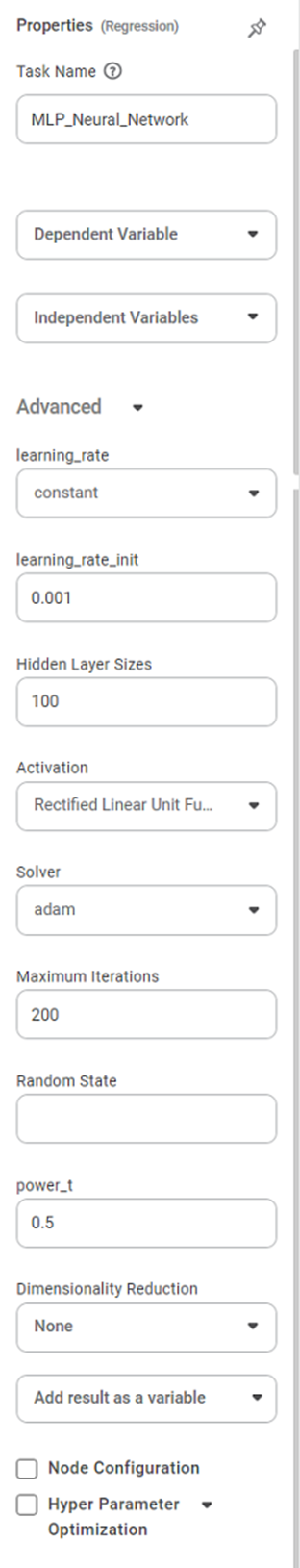

Properties of MLP Neural Network in Regression

The available properties of the MLP Neural Network are shown in the figure below:
