Model Compare

Working with Model Compare

To start working with Model Compare, follow the steps given below.

- Go to the Home page and create a new workbook or open an existing workbook.

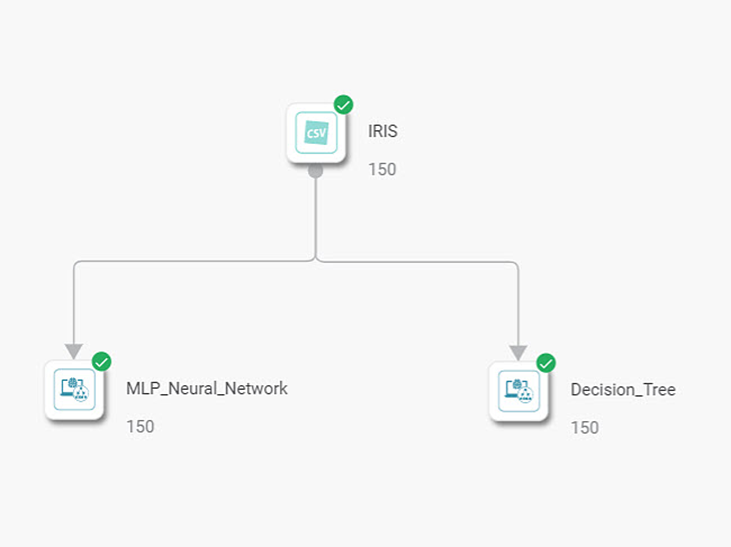

- Drag and drop the required dataset on the workbook canvas.

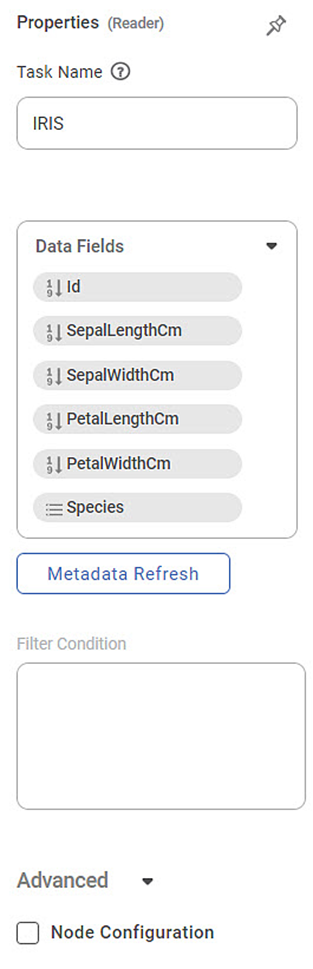

- In the Properties pane, select the Data Fields from the dataset.

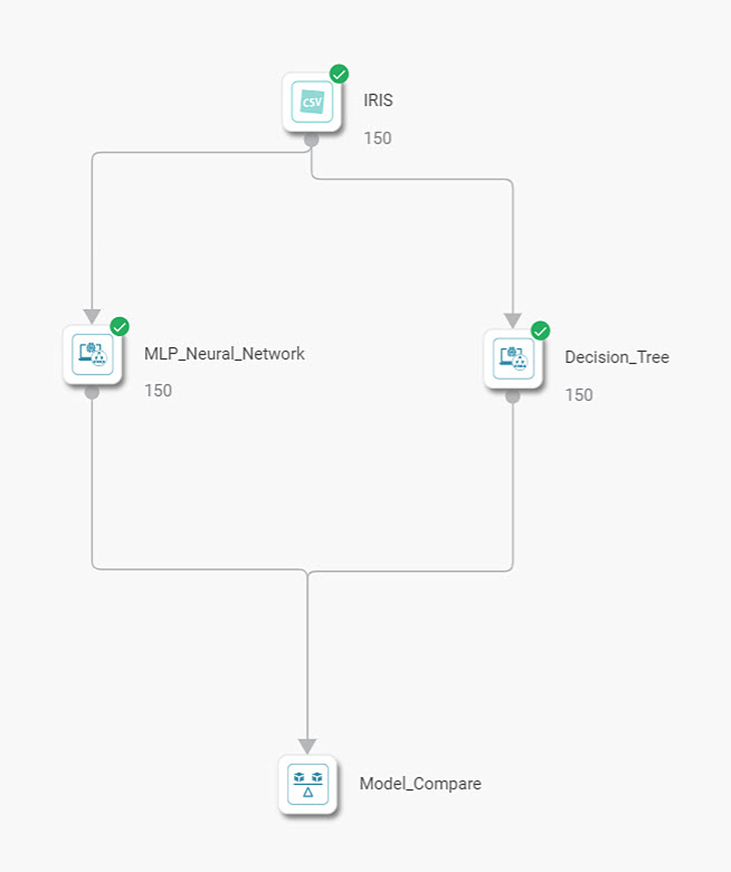

- Select the classification or regression algorithm that you want to compare and connect it to the dataset node.

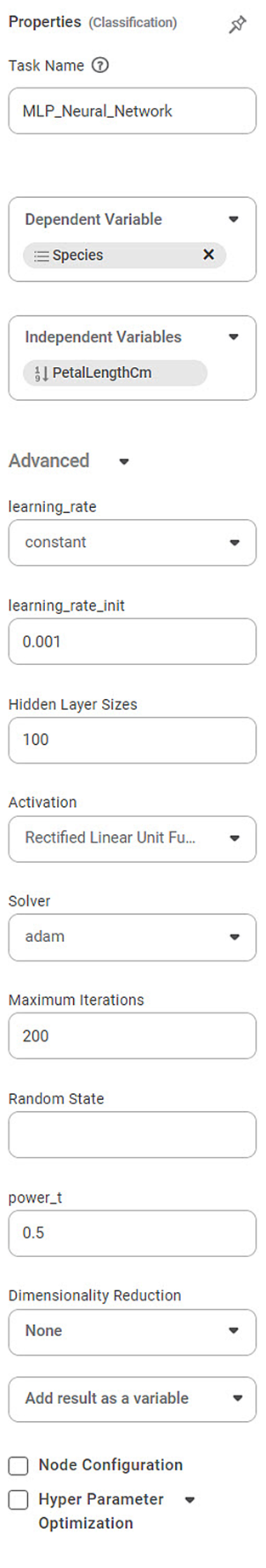

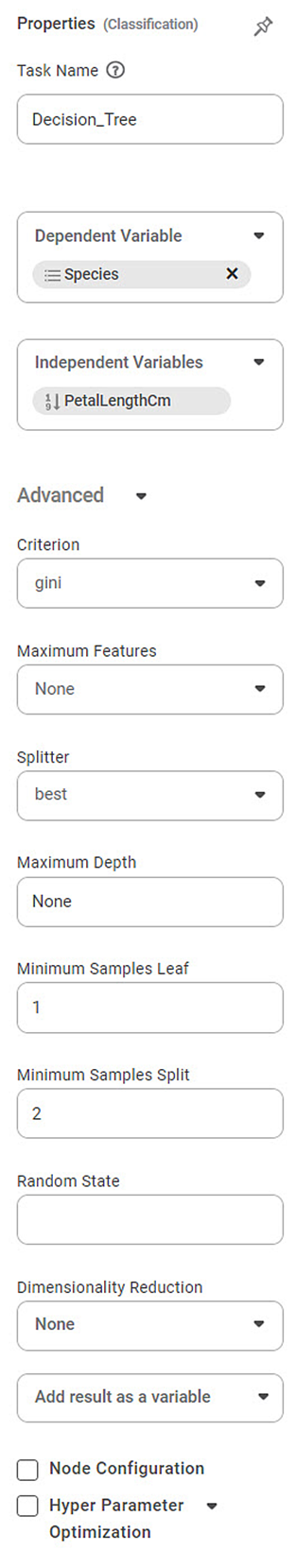

- Select the algorithm node and set its properties in the Properties pane.

Here, we have selected the Dependent and Independent variables and the Advanced Properties of the MLP Neural Network algorithm.

- Click the algorithm and then click Run.

Repeat steps 4 to 6 (select an algorithm, set its properties, and run it) for every algorithm that you want to compare.

Here, we have selected Decision Tree as the second algorithm.

Note:

Make sure that all the models you compare are either Classification models or Regression models. You cannot compare a Classification model with a Regression model.

- Drag and drop Model Compare onto the workbook canvas.

- Connect the selected algorithms to Model Compare.

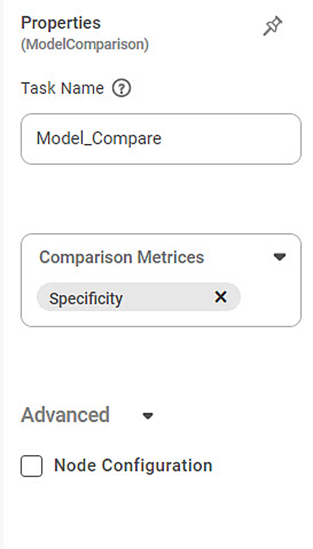

- Select the Comparison Metrics for Model Compare.

Note:

For Regression models, the Comparison Metrics are:

- RMSE

- Adjusted R Square

- R square

- MSE

- MAE

- MAPE

- AIC

- BIC
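To make the regression metrics above concrete, here is a minimal Python sketch of how most of them are conventionally computed. It is not Rubiscape's implementation: the function name `regression_metrics`, the parameter `n_features`, and the sample values are assumptions made for illustration. AIC and BIC are left out because their values depend on the likelihood the tool assumes, which this article does not state.

```python
import math

def regression_metrics(y_true, y_pred, n_features):
    """Compute common regression metrics from actual and predicted values.

    n_features is the number of independent variables (hypothetical
    parameter name), needed only for Adjusted R Square.
    """
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]

    ss_res = sum(e * e for e in errors)          # residual sum of squares
    mse = ss_res / n
    rmse = math.sqrt(mse)
    mae = sum(abs(e) for e in errors) / n
    # MAPE is undefined when an actual value is zero; this sketch assumes none are.
    mape = 100.0 * sum(abs(e / t) for e, t in zip(errors, y_true)) / n

    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)  # total sum of squares
    r2 = 1.0 - ss_res / ss_tot
    # Adjusted R Square penalizes R square for the number of predictors.
    adj_r2 = 1.0 - (1.0 - r2) * (n - 1) / (n - n_features - 1)

    return {
        "RMSE": rmse,
        "Adjusted R Square": adj_r2,
        "R square": r2,
        "MSE": mse,
        "MAE": mae,
        "MAPE": mape,
    }

# Made-up sample values, used only to exercise the function.
metrics = regression_metrics(
    y_true=[3.0, 5.0, 7.0, 9.0],
    y_pred=[2.5, 5.0, 7.5, 9.0],
    n_features=1,
)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Lower values are better for RMSE, MSE, MAE, and MAPE, while higher values are better for R square and Adjusted R Square.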

For Classification models, the Comparison Metrics are:

- Accuracy

- Specificity

- Sensitivity / Recall

- F-Score

- Precision

- AUC
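The classification metrics above, except AUC, can all be derived from the four confusion-matrix counts. The following Python sketch shows the standard definitions for a binary problem. It is an illustration under assumptions, not Rubiscape's code: the function name `classification_metrics`, the `positive` parameter, and the sample labels are hypothetical. AUC is omitted because it needs predicted scores or probabilities rather than class labels.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute binary classification metrics from actual and predicted labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)

    # This sketch assumes every denominator below is non-zero.
    accuracy = (tp + tn) / len(pairs)
    sensitivity = tp / (tp + fn)      # also called recall
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    f_score = 2 * precision * sensitivity / (precision + sensitivity)

    return {
        "Accuracy": accuracy,
        "Specificity": specificity,
        "Sensitivity / Recall": sensitivity,
        "F-Score": f_score,
        "Precision": precision,
    }

# Made-up labels, used only to exercise the function.
scores = classification_metrics(
    y_true=[1, 1, 1, 0, 0, 0, 1, 0],
    y_pred=[1, 0, 1, 0, 1, 0, 1, 0],
)
for name, value in scores.items():
    print(f"{name}: {value:.2f}")
```

For all of these metrics, a higher value indicates a better model.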

If Cross Validation is added as a predecessor to any of the algorithms, the comparison metrics are the mean and the standard deviation of the accuracy.
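As a rough illustration of the cross-validation case, the sketch below summarizes per-fold accuracies into a mean and a standard deviation. The fold values are made up, and the choice of the sample standard deviation (`statistics.stdev`) is an assumption; the article does not say whether the tool uses the sample or the population form.

```python
import statistics

# Hypothetical accuracies from a 5-fold cross validation run.
fold_accuracies = [0.80, 0.85, 0.75, 0.90, 0.80]

mean_accuracy = statistics.mean(fold_accuracies)
# Sample standard deviation (divides by n - 1); an assumption in this sketch.
std_accuracy = statistics.stdev(fold_accuracies)

print(f"Mean accuracy: {mean_accuracy:.4f}")
print(f"Standard deviation of accuracy: {std_accuracy:.4f}")
```

A higher mean with a lower standard deviation suggests a model that is both accurate and stable across folds.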

If Train Test Split is used as a predecessor to the algorithms, the algorithms are compared based on their performance on the Test data.

- Select the Model Compare node, then click Run.

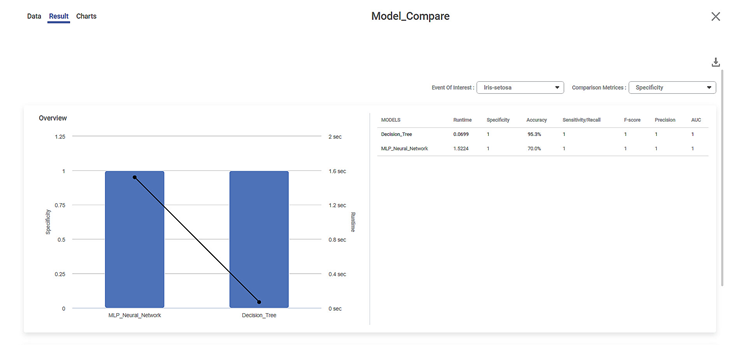

The node execution starts and, after completion, a confirmation is displayed.

- After the Model Compare node execution is complete, click Explore.

The result page is displayed.

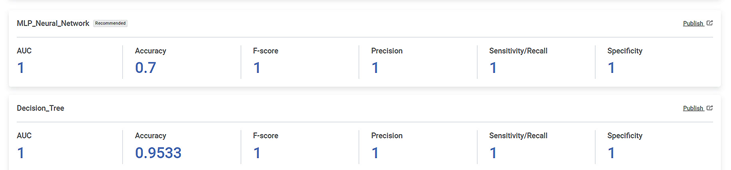

The result page displays the metrics of both models, sorted on the selected performance metric.

As seen in the above figure, in this example, the recommended model is Decision Tree.
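Conceptually, the comparison amounts to sorting the models on one metric and recommending the top entry. The Python sketch below illustrates that idea together with the rule that classification and regression models cannot be mixed. It is not how Rubiscape implements the node: the function `rank_models`, the dictionary layout, and the accuracy values are placeholders, not the figures from the screenshot.

```python
def rank_models(results, metric, higher_is_better=True):
    """Sort model results on one metric, best model first."""
    task_types = {r["task"] for r in results}
    if len(task_types) != 1:
        # Mirrors the rule that both model types cannot be compared together.
        raise ValueError("Cannot compare classification and regression models together")
    return sorted(results, key=lambda r: r["metrics"][metric], reverse=higher_is_better)

# Placeholder values, used only to exercise the function.
results = [
    {"name": "MLP Neural Network", "task": "classification", "metrics": {"Accuracy": 0.81}},
    {"name": "Decision Tree", "task": "classification", "metrics": {"Accuracy": 0.86}},
]

ranked = rank_models(results, metric="Accuracy")
print("Recommended model:", ranked[0]["name"])
```

For error-based metrics such as RMSE or MAE, pass `higher_is_better=False` so that the model with the lowest error ranks first.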
