Model Validation

Model validation is an extension of model publishing. It lets you run a published model on a dataset of your choice and explore the result. The published model reuses the algorithm and the advanced parameters it was published with, so you can compare its results across different datasets.

Training an algorithm produces a trained model, which can then be tested on different datasets. With model validation, you can validate a trained and published model against a selected dataset and explore the result. In Rubiscape, a new version of the trained model is created each time the algorithm is trained on the selected dataset, so the number of versions depends on how many times the algorithm has been trained. You can validate each version of a published model and then choose the version that performs best on the selected dataset.
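The following minimal Python sketch illustrates the idea of validating several versions of a published model on the same dataset and keeping the best one. It is not Rubiscape's implementation or API: the `ModelVersion` class, the one-feature linear model, and the use of RMSE as the performance measure are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelVersion:
    """Hypothetical published model version: a one-feature linear model."""
    version: int
    slope: float
    intercept: float

    def predict(self, x: float) -> float:
        return self.slope * x + self.intercept


def rmse(model: ModelVersion, rows: list[tuple[float, float]]) -> float:
    """Root-mean-square error on (independent, dependent) value pairs."""
    squared = [(model.predict(x) - y) ** 2 for x, y in rows]
    return math.sqrt(sum(squared) / len(squared))


def best_version(versions: list[ModelVersion],
                 rows: list[tuple[float, float]]) -> ModelVersion:
    """Validate every version on the same dataset; return the lowest-error one."""
    return min(versions, key=lambda m: rmse(m, rows))


if __name__ == "__main__":
    versions = [
        ModelVersion(1, slope=1.5, intercept=0.0),
        ModelVersion(2, slope=2.0, intercept=1.0),
    ]
    # Hypothetical new dataset that follows y = 2x + 1 exactly.
    new_data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]
    for m in versions:
        print(f"version {m.version}: RMSE = {rmse(m, new_data):.3f}")
    print("best:", best_version(versions, new_data).version)  # best: 2
```

Every version is scored on the same rows with the same metric, which is what makes the comparison between versions fair.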

For information about publishing a model, refer to Publishing a Model.

To validate the published model on a different dataset, follow the steps given below.

- On the home page, click Models.

Recently published models for the selected workspace are displayed.

- Hover over the model that you want to validate and click the model tile.

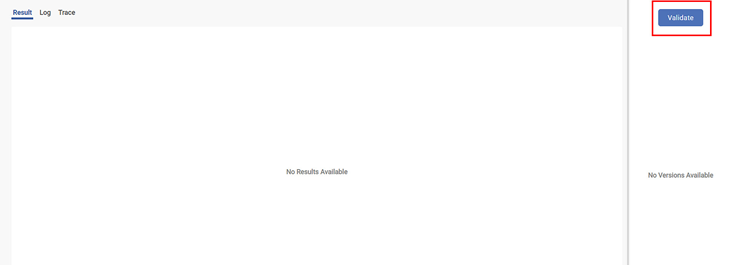

The Result page for the published model is displayed.

- In the right pane, click Validate.

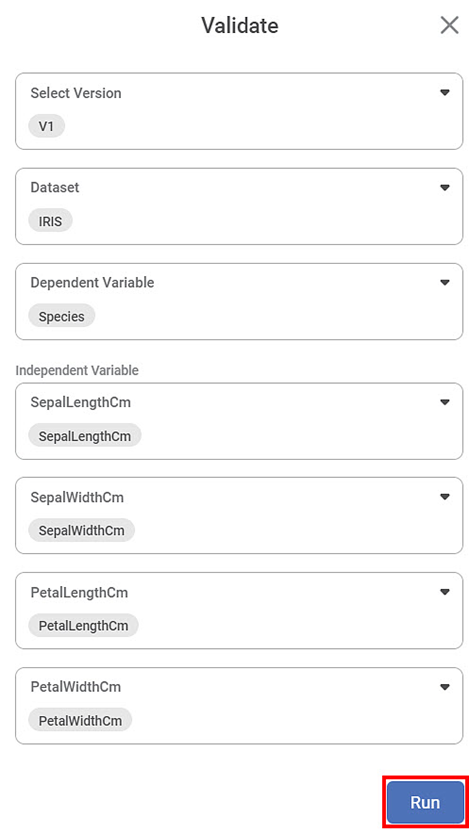

The Validate page is displayed.

- Click Select Version and select a version of the published model to validate.

- Select a dataset.

- Select the Dependent Variable and the Independent Variables for the dataset.

Notes:

- The properties displayed on the Validate page depend on the selected model and may differ from the figure below.

- You do not need to set the advanced parameters again, because the published model runs the same algorithm with the same advanced parameters on the selected dataset.
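The second note can be pictured with a small data model: the user supplies only the version, the dataset, and the variables, while the algorithm and its advanced parameters are carried over from the published model. This is a hypothetical Python sketch, not Rubiscape's internal structure; `PublishedModel`, `ValidationRequest`, `run_config`, and all field names and sample values are assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class PublishedModel:
    """Hypothetical record of one published model version."""
    algorithm: str
    version: int
    advanced_params: dict = field(default_factory=dict)


@dataclass(frozen=True)
class ValidationRequest:
    """Hypothetical inputs collected on the Validate page."""
    model: PublishedModel
    dataset: str
    dependent: str
    independent: tuple[str, ...]

    def run_config(self) -> dict:
        # Advanced parameters are copied from the published model;
        # the user never re-enters them for the new dataset.
        return {
            "algorithm": self.model.algorithm,
            "version": self.model.version,
            "params": dict(self.model.advanced_params),
            "dataset": self.dataset,
            "target": self.dependent,
            "features": list(self.independent),
        }


if __name__ == "__main__":
    model = PublishedModel("Linear Regression", 2, {"fit_intercept": True})
    request = ValidationRequest(model, "sales_2024.csv", "revenue",
                                ("ad_spend", "region"))
    print(request.run_config())
```

The parameters are copied rather than shared, so a change made to one run's configuration cannot alter the published model itself.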

- Click Run.

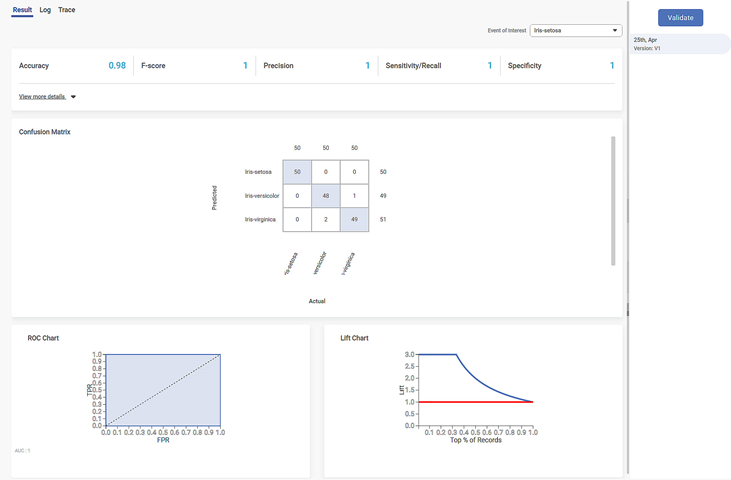

The Result page for the selected dataset is displayed. Because the model now runs on a different dataset, the values on this page differ from those on the Result page of the published model.

Related Articles

- Model Compare
- Workbook Validation
- Cross Validation