Random Forest Regression

Random Forest Regression is located under Machine Learning > Regression > Random Forest Regression.

Drag and drop the node onto the canvas (or double-click it) to use the algorithm. Click the algorithm to view and select its properties for analysis.

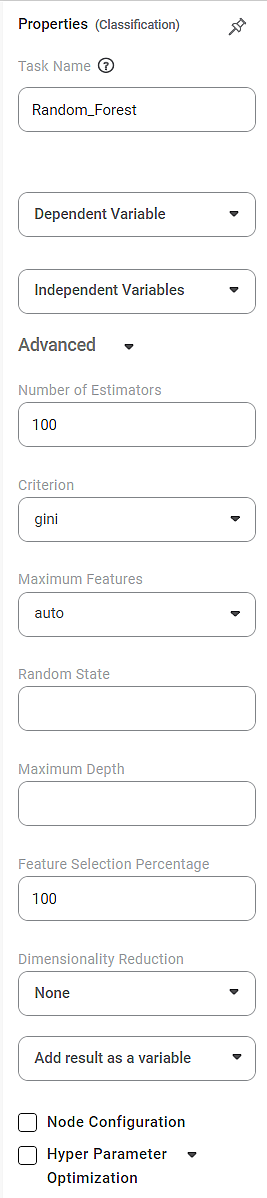
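For readers who want a code-level intuition of what a random forest regressor does, the following is a minimal sketch in plain Python. It is not the product's implementation, which this article does not describe. The function names and the parameters `n_trees`, `max_depth`, `n_features`, and `seed` are hypothetical and chosen only for illustration; they may not match the node's property names. The sketch trains several small regression trees, each on a bootstrap sample with a random subset of features per split, and averages their predictions.

```python
import random
from statistics import mean


def sse(values):
    """Sum of squared errors of the values around their mean."""
    m = mean(values)
    return sum((v - m) ** 2 for v in values)


def best_split(rows, targets, feature_ids):
    """Return the (feature, threshold) pair with the lowest SSE, or None."""
    best, best_err = None, float("inf")
    for f in feature_ids:
        for t in sorted({r[f] for r in rows}):
            left = [y for r, y in zip(rows, targets) if r[f] <= t]
            right = [y for r, y in zip(rows, targets) if r[f] > t]
            if not left or not right:
                continue  # a split must put samples on both sides
            err = sse(left) + sse(right)
            if err < best_err:
                best, best_err = (f, t), err
    return best


def build_tree(rows, targets, max_depth, n_features, rng):
    """Grow one regression tree; a leaf is a number, a node is a tuple."""
    if max_depth == 0 or len(set(targets)) == 1:
        return mean(targets)
    # Random feature subset per split (hypothetical n_features setting).
    feature_ids = rng.sample(range(len(rows[0])), n_features)
    split = best_split(rows, targets, feature_ids)
    if split is None:
        return mean(targets)
    f, t = split
    left = [(r, y) for r, y in zip(rows, targets) if r[f] <= t]
    right = [(r, y) for r, y in zip(rows, targets) if r[f] > t]
    return (
        f,
        t,
        build_tree([r for r, _ in left], [y for _, y in left],
                   max_depth - 1, n_features, rng),
        build_tree([r for r, _ in right], [y for _, y in right],
                   max_depth - 1, n_features, rng),
    )


def predict_tree(node, row):
    """Walk from the root to a leaf and return the leaf value."""
    while isinstance(node, tuple):
        f, t, left, right = node
        node = left if row[f] <= t else right
    return node


def fit_forest(rows, targets, n_trees=25, max_depth=4, n_features=1, seed=0):
    """Train n_trees trees, each on a bootstrap sample of the data."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(rows)) for _ in rows]  # sample with replacement
        forest.append(build_tree([rows[i] for i in idx],
                                 [targets[i] for i in idx],
                                 max_depth, n_features, rng))
    return forest


def predict_forest(forest, row):
    """The forest prediction is the average of the tree predictions."""
    return mean(predict_tree(tree, row) for tree in forest)


# Toy data (made up for illustration): the target steps from 10 to 50 at x = 5;
# the second feature (x % 3) is unrelated to the target.
rows = [[x, x % 3] for x in range(10)]
targets = [10.0 if x < 5 else 50.0 for x in range(10)]

forest = fit_forest(rows, targets, n_trees=25, max_depth=4, n_features=1, seed=0)
low = predict_forest(forest, [1, 0])
high = predict_forest(forest, [8, 0])
print(low, high)
```

Averaging many trees trained on different bootstrap samples is what makes the forest less sensitive to any single tree's splits. A production library would add further options that this sketch omits.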

Properties of Random Forest Regression

The available properties of Random Forest Regression are shown in the figure below.

Related Articles

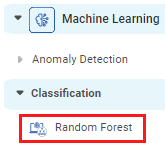

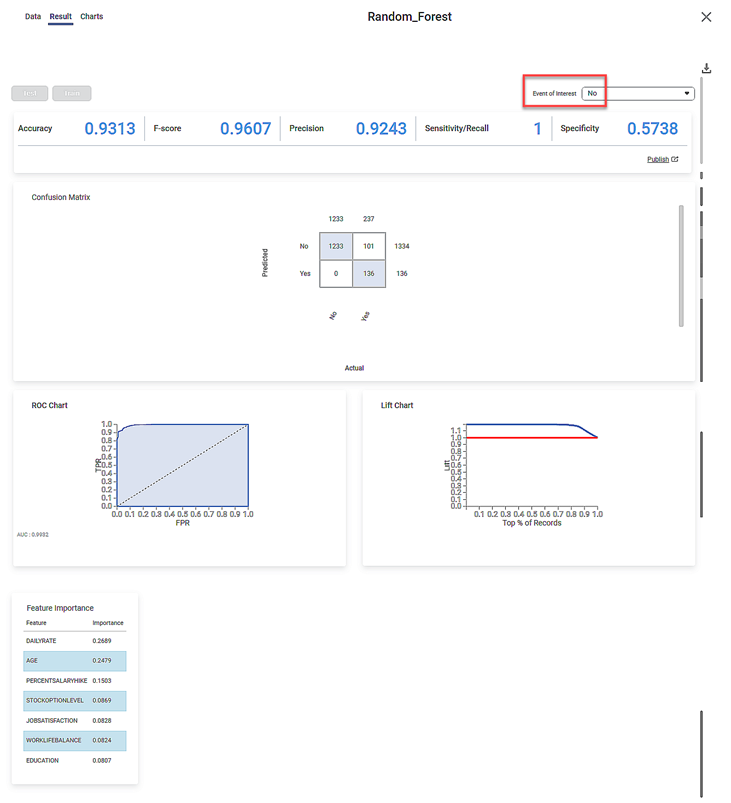

Random Forest

Random Forest is located under Machine Learning in Classification, in the left task pane. Use the drag-and-drop method (or double-click on the node) to use the algorithm in the canvas. Click the algorithm to view and select different properties ...

Lasso Regression

Lasso Regression is located under Machine Learning in Regression, in the left task pane. Use the drag-and-drop method to use the algorithm in the canvas. Click the algorithm to view and select different properties for analysis. Refer to ...

Ridge Regression

Ridge Regression is located under Machine Learning in Regression, in the left task pane. Use the drag-and-drop method to use the algorithm in the canvas. Click the algorithm to view and select different properties for analysis. Refer to ...

Decision Tree Regression

Decision Tree Regression is located under Machine Learning > Regression > Decision Tree Regression. Use the drag-and-drop method (or double-click on the node) to use the algorithm in the canvas. Click the algorithm to view and select different ...

Binomial Logistic Regression

Binomial Logistic Regression is located under Machine Learning in Data Classification, in the left task pane. Use the drag-and-drop method to use the algorithm in the canvas. Click the algorithm to view and select different properties for ...