Adaboost

Adaboost is located under Textual Analysis, in Classification, in the left task pane. Drag and drop the algorithm onto the canvas to use it. Click the algorithm to view and select the properties for analysis.

Refer to Properties of Adaboost.

Properties of Adaboost

The available properties of Adaboost are as shown in the figure below.

The table below describes different fields present on the properties of Adaboost.

| Field | Description | Remark |
|---|---|---|
| Run | It allows you to run the node. | - |
| Explore | It allows you to explore the successfully executed node. | - |
| Vertical Ellipses | It shows the options available for the node. | - |
| Task Name | It is the name of the task selected on the workbook canvas. | You can click the text field to edit or modify the task's name as required. |
| Dependent Variable | It allows you to select the dependent variable. | You can select one variable. |
| Text | It allows you to select the text variable. | The default value is eight (8). |
| Advanced: Random State | It allows you to select a random combination of train and test data for the classifier. | |
| Advanced: Learning Rate | It allows you to select the weight value for the learning rate of the weak learning algorithms. | |
| Advanced: Number of Estimators | It allows you to select the number of weak learners to be created while running the algorithm. | |
| Advanced: Algorithm | It allows you to select the boosting algorithm for classification. There are two types of boosting algorithms: the Discrete boosting algorithm and the Real boosting algorithm. | |
| Add result as a variable | It allows you to select any of the result parameters as the variable. | You can select from the performance parameters of Adaboost. |
| Node Configuration | It allows you to select the instance of the AWS server to provide control on the execution of a task in a workbook or workflow. | For more details, refer to Worker Node Configuration. |
| Hyperparameter Optimization | It allows you to select parameters for optimization. | For more details, refer to Hyperparameter Optimization. |
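
The effect of the Learning Rate on each weak learner can be illustrated with a short Python sketch. It assumes that the Discrete boosting option follows the standard SAMME update rule; the product's internal implementation is not documented here, and the function names `samme_estimator_weight` and `reweight` are hypothetical.

```python
import math


def samme_estimator_weight(error, n_classes, learning_rate=1.0):
    """Vote weight of one weak learner under the discrete SAMME rule.

    error is the weighted misclassification rate of the learner (0 < error < 1).
    """
    if n_classes < 2:
        raise ValueError("n_classes must be at least 2")
    if not 0.0 < error < 1.0:
        raise ValueError("error must be strictly between 0 and 1")
    return learning_rate * (
        math.log((1.0 - error) / error) + math.log(n_classes - 1.0)
    )


def reweight(sample_weights, misclassified, alpha):
    """Boost the weight of misclassified samples, then renormalize to sum to 1."""
    boosted = [
        w * math.exp(alpha) if wrong else w
        for w, wrong in zip(sample_weights, misclassified)
    ]
    total = sum(boosted)
    return [w / total for w in boosted]


# Illustrative values only: 5 classes, a weak learner with 30% weighted error.
alpha_small = samme_estimator_weight(0.3, n_classes=5, learning_rate=0.1)
alpha_full = samme_estimator_weight(0.3, n_classes=5, learning_rate=1.0)

# Four equally weighted samples; only the first one was misclassified.
new_weights = reweight([0.25, 0.25, 0.25, 0.25], [True, False, False, False], alpha_full)
```

In this sketch, a smaller learning rate shrinks the vote of every weak learner by the same factor, which is why a low Learning Rate usually needs a larger Number of Estimators to reach a comparable fit.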

Example of Adaboost

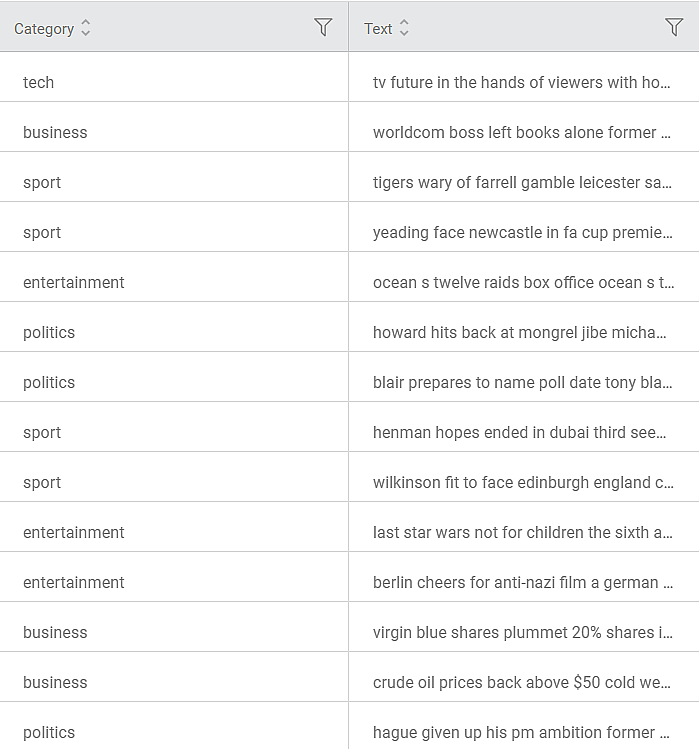

Consider a dataset containing BBC news snippets classified into different categories like tech, business, sport, politics, and so on.

A snippet of input data is shown in the figure given below.

We select the following properties and apply Adaboost.

| Property | Value |
|---|---|
| Dependent Variable | Category |
| Text | Text |
| Random State | None |
| Learning Rate | 0.1 |
| Number of Estimators | 5 |
| Algorithm | SAMME |
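
For readers who want to reproduce a comparable setup outside the product, the following is a minimal sketch using scikit-learn. It is an assumption that a TF-IDF vectorizer feeding `AdaBoostClassifier` approximates what the node does; the toy texts are hypothetical and are not taken from the BBC dataset. The Algorithm property is left at the library default because scikit-learn handles its `algorithm` parameter differently across versions.

```python
from sklearn.ensemble import AdaBoostClassifier
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.pipeline import make_pipeline

# Hypothetical toy rows standing in for the Text and Category columns.
texts = [
    "new smartphone chip released by chipmaker",
    "software update improves laptop battery",
    "shares rise as company profits grow",
    "bank reports strong quarterly earnings",
    "striker scores twice in cup final",
    "team wins championship after penalty shootout",
]
categories = ["tech", "tech", "business", "business", "sport", "sport"]

# Learning Rate = 0.1, Number of Estimators = 5, Random State = None,
# mirroring the property values selected above.
model = make_pipeline(
    TfidfVectorizer(),
    AdaBoostClassifier(n_estimators=5, learning_rate=0.1, random_state=None),
)
model.fit(texts, categories)

# Predicted category for each text, comparable to the Label column.
labels = model.predict(texts)
```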

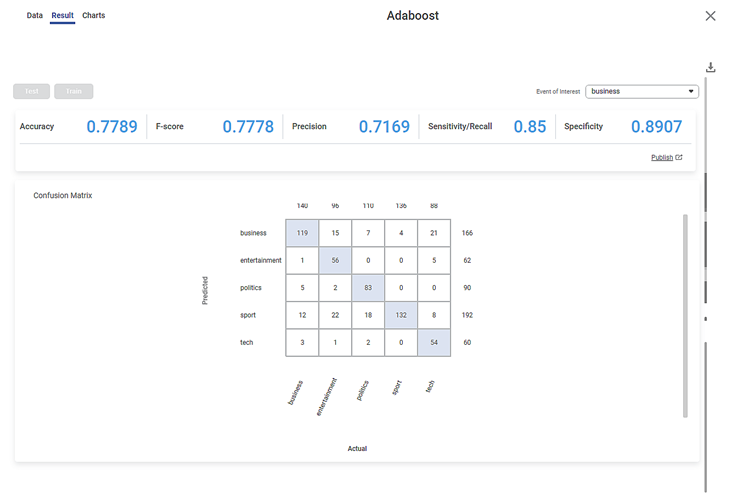

The result page is displayed in the figure given below. On the result page, you can see the following output parameters:

- Accuracy, F-score, Precision, Sensitivity, and Specificity are the model performance indicators displayed on the Results page.

- The Accuracy of the above Adaboost model is 0.7368, F-score is 0.7475, Precision is 0.7169, Sensitivity is 0.8143, and Specificity is 0.8814.

On the same result page, you see the confusion matrix containing predicted and actual values for Category.

- The shaded diagonal cells show the correctly predicted categories. For example, 119 news items (out of 140) in the business category are correctly predicted.

- The remaining cells indicate the wrongly predicted categories. For example, 3 news items in the business category are wrongly predicted as tech.
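
The relationship between a confusion matrix and the performance indicators can be sketched in Python as follows. The matrix below is a hypothetical three-class example, not the one from the figure, and the helper names are illustrative. How the product combines per-class values into the single figures shown on the result page is not stated, so the sketch reports values for one class at a time.

```python
def accuracy(matrix):
    """Share of all items that lie on the diagonal (correct predictions)."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total


def per_class_metrics(matrix, k):
    """Return (precision, sensitivity, specificity) for class index k.

    matrix[i][j] counts items whose actual class is i and predicted class is j.
    """
    total = sum(sum(row) for row in matrix)
    tp = matrix[k][k]
    fn = sum(matrix[k]) - tp                 # actual k, predicted as another class
    fp = sum(row[k] for row in matrix) - tp  # predicted k, actually another class
    tn = total - tp - fn - fp
    precision = tp / (tp + fp) if tp + fp else 0.0
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    return precision, sensitivity, specificity


# Hypothetical matrix: rows are actual classes, columns are predicted classes.
toy = [
    [8, 1, 1],
    [2, 6, 2],
    [0, 1, 9],
]

overall_accuracy = accuracy(toy)
precision_0, sensitivity_0, specificity_0 = per_class_metrics(toy, 0)
```

The F-score for a class can then be computed as the harmonic mean of its precision and sensitivity, `2 * precision * sensitivity / (precision + sensitivity)`.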

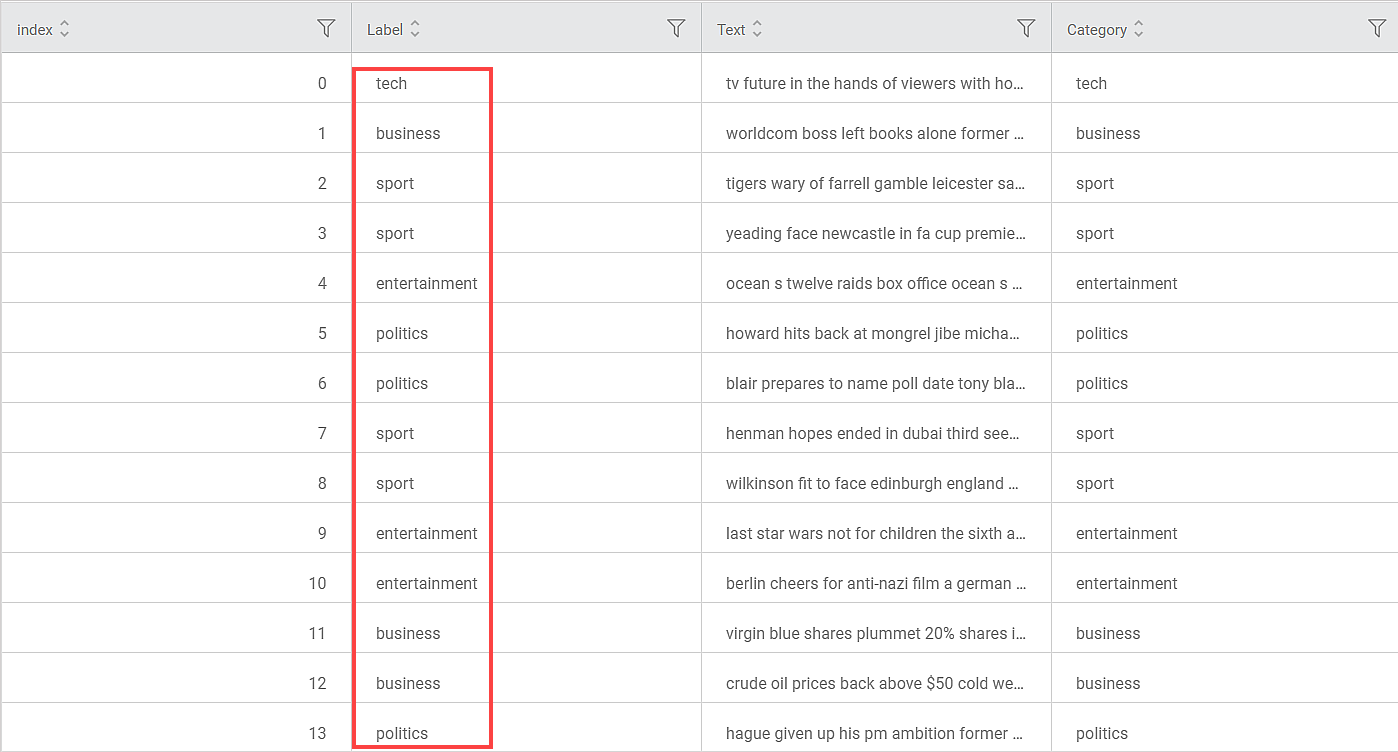

The figure below shows the resultant dataset in the Data tab.

- Along with the Index and Text columns, you can see the Category column showing the original categories.

- The predicted categories for each text are listed under the Label column.

- You can compare the Label and Category columns to identify correctly and wrongly predicted categories.
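
If the resultant dataset is exported, the comparison of the two columns can be automated. The sketch below assumes rows with the Index, Text, Category, and Label columns described above; the sample rows and the helper `split_by_correctness` are hypothetical.

```python
# Hypothetical rows mirroring the Index, Text, Category, and Label columns.
rows = [
    {"Index": 0, "Text": "shares rise on earnings", "Category": "business", "Label": "business"},
    {"Index": 1, "Text": "new phone launched", "Category": "tech", "Label": "business"},
    {"Index": 2, "Text": "late goal wins match", "Category": "sport", "Label": "sport"},
]


def split_by_correctness(rows, actual="Category", predicted="Label"):
    """Separate rows where the predicted label matches the original category."""
    correct = [r for r in rows if r[actual] == r[predicted]]
    wrong = [r for r in rows if r[actual] != r[predicted]]
    return correct, wrong


correct, wrong = split_by_correctness(rows)
```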
