Batch Processing

- Pipeline allows you to divide the dataset/Tasks into batches and then process it.

- Batch processing is mainly used to simplify many ETL operations like Missing value Imputation, expression, and validating data.

- You can specify the batch size called Chunk.

- Batch processing is used because it allows large volumes of data or tasks to be processed efficiently, automatically, and consistently—without requiring manual intervention for each record.

- This functionality is available in Pipelines.

Configuring Batch Processing

- Click on three dots () on the right-hand corner of the Workflow landing page. The batch processing option is displayed.

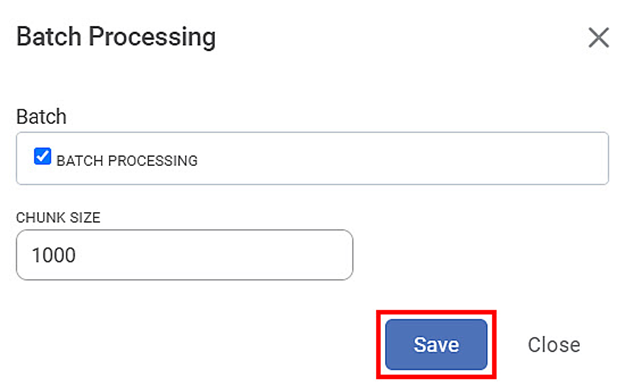

- Click on batch processing option, and the following popup is displayed.

- The default chunk size is 1000. The batches are created depending on the chunk size.

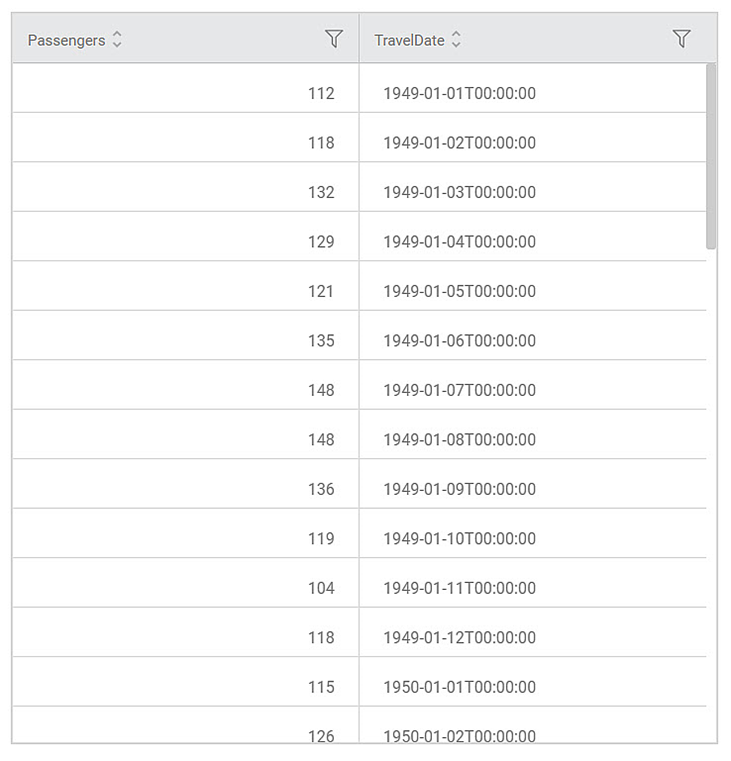

- The data page will be displayed as follows

- The records are processed as per mentioned batch size. When you explore the output, you will see all the processed data together.

- The data is processed internally in the batches and hence is applicable for the tasks wherein batch operations can be handled.

- The tasks which are not supporting batch execution will still execute with the regular execution.

Advantages

- Processing becomes faster. It improves the speed of many ETL operations like Missing value Imputation, expression, Cleansing, and model testing.

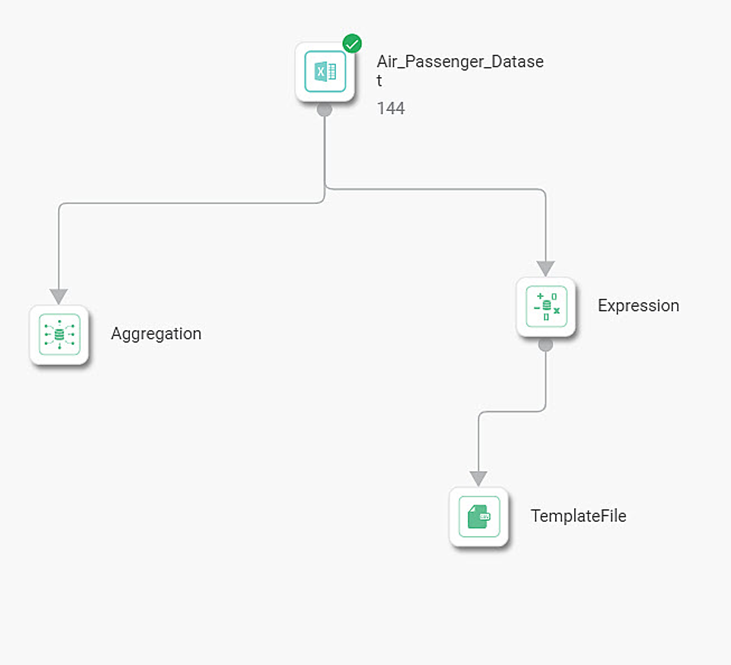

- The parallel processing becomes faster. Consider the following pipeline.

- Dataset reads the data from the file.

- You build an expression and save it in the output file in one process.

- In another process you build the Model.

- When you apply batch processing, both processes run concurrently.

- Following figure explains the parallel processing.

- You are allowed to explore the model when it is processing.

Limitations

- Apply Batch processing only on pipeline.

- Don’t apply Batch processing in the case of entire column operations like average, and totals.

- Batch processing is not allowed on the datasets generated using the following techniques

- SSAS RDBMS

- JSON file format

- Google news

Related Articles

Batch Processing

Working with Batches Workflow in Data Integrator allows you to divide the dataset into batches and then process it. Batch processing is mainly used to simplify many ETL operations like Missing value Imputation, expression, and validating data. You ...Generate Smart Insights with Text/Image Processing

Smart Data Insights - Dashboard Data vs External Text/Image: RubiAI allows you to generate Smart Insights wrt dashboard data and the uploaded Text/Image file. You can attach any file in the text formats- word, , csv, excel, pdf, text and image ...Introduction To Pipeline Elements (Know-How)

This guide explains the key interface components of the Rubiscape Pipeline and Workbook environment—Main Menu, Nodes, Properties Pane, Task Pane, Edit Mode, and View Mode. Understanding these elements helps users design, configure, and manage ...View and Edit Mode in Workbook/Workflow

Rubiscape allows you to access the Workbook/Workflow in two modes: View and Edit. View mode is available for anyone accessing a workbook/workflow. You can switch modes by selecting the mode option in the Function pane. The "Access Log" option within ...Understanding WorkFlow Canvas

The workflow canvas is the area where you can build algorithm flows. When you open a workflow, the following icons and fields are displayed. The workflow screen has four panes as given below. Task Pane: This pane displays the datasets and algorithms ...